Creating an in-browser augmented reality experience with a smartphone and cardboard – sounds awesome, doesn’t it? Here are my thoughts on the bumpy road I have traveled so far:

The Idea

A smartphone is capable of delivering VR experiences using a browser, WebGL, and a cardboard. If you add a stream from the back-facing camera to it, and do some object recognition on the incoming video data, then that would make an augmented reality setup, right? Way too exciting not to give it a shot…

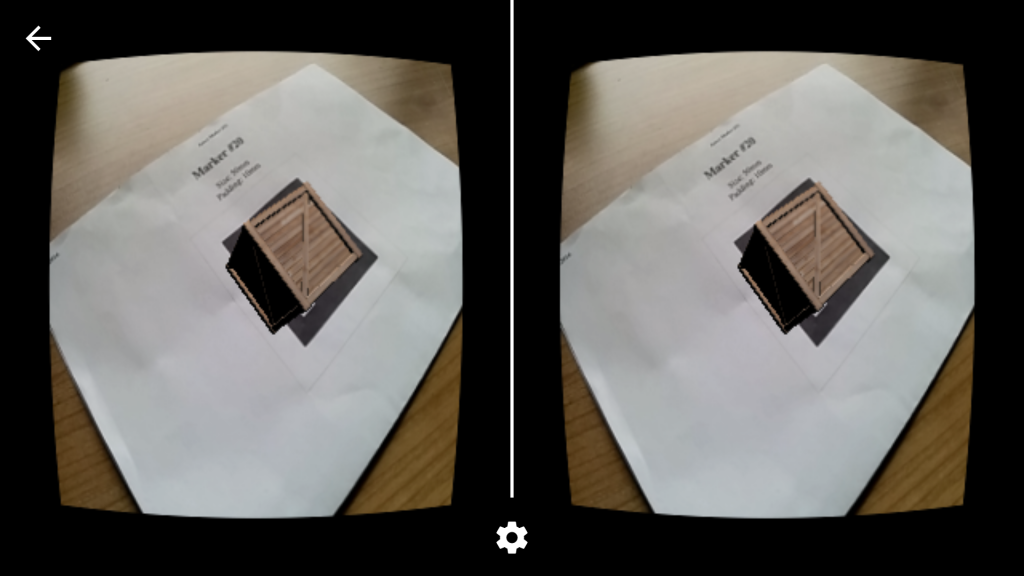

Augmented Reality for Cardboard style VR on a Nexus 5

The Downside of Cardboard Style VR

When comparing VR experiences on a cardboard device to those on a full-grown HMD, one issue immediately comes to mind: the missing lateral axis (positional) tracking. Take this experience for example: when you want to lean in to see more detail, you find that you can’t. This really breaks the immersion.

There have been concepts to overcome this limitation, like using the gyroscope to predict lateral movement, or using a webcam to track actual movement. However, none of these yields promising results.

The Upside of Cardboard Style VR

That said, smartphones all have one feature: a built-in back-facing camera. And with today’s browser APIs, WebRTC in this case, it is entirely possible to read the camera stream. That opens up a whole new dimension: the world of augmented reality.

The most intriguing part of AR is that it doesn’t need rotational and lateral axis tracking to be immersive. When streaming real world camera data to the user, the impression arises that the device knows about the user’s position and rotation in space, while it actually doesn’t.

Thus, it is possible to overcome one of the larger limitations of cardboard style VR experiences, and there are other benefits as well. Browser APIs offer some nice options, among them speech recognition, which allows interacting with the scene without the need for tactile input.

Augmenting the reality

To actually augment the camera stream, object recognition is needed. It would be great to be able to recognize arbitrary objects, like a desk, or a human. Sadly, we’re not there. Yet. The solution that works today is special markers. Detecting the presence of a marker is not enough; its distance and rotation need to be known as well. With that information, it is also possible to deduce the user’s position and rotation in space, given that the actual position and rotation of the marker are known. But, as I said, this is not needed to create an immersive experience.

Marker detection and pose estimation (calculating the marker’s distance and rotation) are not new to computer science, and there are quite a few papers out there on the topic. Strictly speaking, it is not possible to calculate this information exactly; it is all about efficient estimation. But some algorithms have implementations, and ports to JavaScript exist as well.
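
To give a feeling for what "distance and rotation" means in numbers, here is a deliberately simplified sketch in TypeScript. It is not the algorithm of any particular library: it assumes a pinhole camera, a marker that faces the camera roughly head-on, and four corner points ordered top-left, top-right, bottom-right, bottom-left. The names `estimateDistance`, `estimateRoll`, and the parameters `markerSizeM` and `focalLengthPx` are made up for illustration.

```typescript
interface Point { x: number; y: number; }

// Length of one marker edge in image pixels.
function edgeLength(a: Point, b: Point): number {
  return Math.hypot(b.x - a.x, b.y - a.y);
}

// Pinhole model: distance = focal length (px) * real size (m) / apparent size (px).
// Averaging the four edges softens the effect of a slightly tilted marker.
function estimateDistance(corners: Point[], markerSizeM: number, focalLengthPx: number): number {
  let sum = 0;
  for (let i = 0; i < 4; i++) {
    sum += edgeLength(corners[i], corners[(i + 1) % 4]);
  }
  return (focalLengthPx * markerSizeM) / (sum / 4);
}

// In-plane rotation (roll) in radians, taken from the direction of the top edge.
// Assumes corners[0] is top-left and corners[1] is top-right.
function estimateRoll(corners: Point[]): number {
  const [topLeft, topRight] = corners;
  return Math.atan2(topRight.y - topLeft.y, topRight.x - topLeft.x);
}
```

A 10 cm marker that appears 60 pixels wide through a camera with a focal length of 600 pixels would come out at about one meter. A real pose estimator also recovers tilt and the full 3D translation, which this sketch ignores.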

Whipping it together

So, what needs to happen? Here’s the basic flow:

1) Capture the camera stream using WebRTC

2) Render the stream into a video element

3) Read image data from video and render it into a canvas to obtain pixel data

4) Send the pixel data to object recognition

5) Do a pose estimation based on object recognition and position a 3D Object according to the data

6) Draw a stereoscopic image of the camera stream into a canvas

7) Draw a matching stereoscopic image of the augmentation into a canvas
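
Steps 3 and 4 of the flow above can be sketched roughly like this in TypeScript. The small interfaces are stand-ins I made up so that the sketch also runs outside a browser; in a browser, a video element and a 2D canvas context should fit these shapes. The grayscale conversion is an assumption about what a marker detector typically wants as input, not a requirement of a specific library.

```typescript
// Minimal structural stand-ins for a video element and a 2D canvas context.
interface VideoLike { videoWidth: number; videoHeight: number; }
interface PixelFrame { data: Uint8ClampedArray; width: number; height: number; }
interface Context2DLike {
  drawImage(source: unknown, dx: number, dy: number, dw: number, dh: number): void;
  getImageData(sx: number, sy: number, sw: number, sh: number): PixelFrame;
}

// Step 3: copy the current video frame into the canvas and read back RGBA pixels.
function grabFrame(video: VideoLike, ctx: Context2DLike): PixelFrame {
  const w = video.videoWidth;
  const h = video.videoHeight;
  ctx.drawImage(video, 0, 0, w, h);
  return ctx.getImageData(0, 0, w, h);
}

// Preparation for step 4: collapse RGBA to one luminance byte per pixel.
function toGrayscale(frame: PixelFrame): Uint8ClampedArray {
  const gray = new Uint8ClampedArray(frame.width * frame.height);
  for (let i = 0, j = 0; j < gray.length; i += 4, j++) {
    // Common luma weights; the alpha channel at i + 3 is ignored.
    gray[j] = 0.299 * frame.data[i] + 0.587 * frame.data[i + 1] + 0.114 * frame.data[i + 2];
  }
  return gray;
}
```

Both functions run once per frame, so the detour through the canvas is paid on every frame.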

All steps except the first need to be taken for each frame. As a high frame rate is essential for a comfortable experience, all those steps need to finish in less than 16 ms, the time available for one frame at 60 frames per second. That’s really not much time… especially since the list only covers the basic setup; in a real-world example, additional time is needed to compute and draw the actual augmentation. Performance-wise, I found it especially annoying that there doesn’t seem to be a shortcut from step one to step three. For the augmentation, I used an Aruco marker and placed a wooden box where it was detected.
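
The frame budget is simple arithmetic, sketched here in TypeScript. The step names and timings in the usage note are invented placeholders; real numbers would have to be measured on the device, for example with `performance.now()` around each step.

```typescript
// Time available per frame at a given frame rate, in milliseconds.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

// One measured (or guessed) duration per pipeline step.
interface StepTiming { step: string; ms: number; }

// Budget left after all steps; a negative value means dropped frames.
function remainingBudgetMs(fps: number, timings: StepTiming[]): number {
  const spent = timings.reduce((sum, t) => sum + t.ms, 0);
  return frameBudgetMs(fps) - spent;
}
```

With made-up timings of 5 ms for reading pixels and 10 ms for detection, `remainingBudgetMs(60, ...)` leaves less than 2 ms for drawing both stereo images.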

The Implementation

Steps 1 to 3 are homegrown and pretty straightforward. Except, well, making my Nexus stream the back-facing camera was hard to get right, and I had to resort to the MediaStreamTrack.getSources() API to make it happen. For steps 4 and 5 I used the js-aruco library. For steps 6 and 7, I tried different approaches:

My first idea was to take the pixel data from the camera stream and use it as a texture for a plane geometry. I placed that plane somewhat back in the scene. The whole scene is then piped through the webvr-boilerplate, which takes care of applying distortion, FOV and the generic IPD.
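
For reference, here is a rough TypeScript sketch of how the back-facing camera can be selected with the standardized API, `navigator.mediaDevices.enumerateDevices()` plus `getUserMedia()` constraints. This is not the code I used. Matching on the device label is a heuristic and an assumption: labels differ between browsers and stay empty until the user has granted camera permission. The function names are made up.

```typescript
// Shape of the entries that enumerateDevices() resolves to (only the fields used here).
interface DeviceInfoLike { kind: string; label: string; deviceId: string; }

// Heuristic: pick the first video input whose label hints at the back camera.
function pickBackCamera(devices: DeviceInfoLike[]): DeviceInfoLike | undefined {
  return devices
    .filter((d) => d.kind === "videoinput")
    .find((d) => /back|rear|environment/i.test(d.label));
}

// Build getUserMedia() constraints: pin the device if one was found,
// otherwise ask the browser to prefer the environment-facing camera.
function backCameraConstraints(devices: DeviceInfoLike[]) {
  const back = pickBackCamera(devices);
  return back
    ? { audio: false, video: { deviceId: { exact: back.deviceId } } }
    : { audio: false, video: { facingMode: { ideal: "environment" } } };
}
```

In a browser, the result would be passed to `navigator.mediaDevices.getUserMedia()`, and the returned stream assigned to the video element’s `srcObject`.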

The second idea was to draw the camera stream onto two distinct 2D canvases, with some offset to cope with IPD, and then draw the 3D scene in a transparent canvas on top of it. I did not pipe the whole scene through the webvr-boilerplate, but instead used the THREE.StereoEffect(), which nicely handles scissor testing.
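
The geometry behind the second approach boils down to two rectangles and a horizontal shift. Here is a minimal TypeScript sketch; the function names are invented, and the sign convention for the shift is an assumption that has to be tuned by eye on the actual device.

```typescript
interface Rect { x: number; y: number; width: number; height: number; }

// Split the canvas into a left-eye and a right-eye half,
// i.e. the rectangles a viewport or scissor test would be set to.
function eyeViewports(canvasWidth: number, canvasHeight: number): { left: Rect; right: Rect } {
  const half = Math.floor(canvasWidth / 2);
  return {
    left: { x: 0, y: 0, width: half, height: canvasHeight },
    right: { x: half, y: 0, width: canvasWidth - half, height: canvasHeight },
  };
}

// Horizontal shift of the camera image per eye, in pixels.
// The total shift is split evenly; which eye gets which sign is an assumption.
function cameraImageOffsets(totalShiftPx: number): { left: number; right: number } {
  return { left: -totalShiftPx / 2, right: totalShiftPx / 2 };
}
```

The same camera frame is drawn into both rectangles, once per eye, with the respective offset added to its x position.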

The Result

In short: highly unsatisfactory, for three reasons:

1) Performance is very poor; the frame rate is way too low

2) Getting the 3D scene (i.e. the box) in sync with the background plane is incredibly hard

3) Camera stream is monoscopic

While the first two issues can certainly be overcome, the third one is a show stopper. There is only one camera, and thus only one angle. The thing is, from a distance of around 20 meters onward, the two angles our eyes provide produce approximately the same image. But the marker that I detect is no more than a meter away. While looking at nothing but a plane surface, that is OK. As soon as there are other objects, be it your hands or a glass on the table, the brain (rightfully) complains that the two images presented to the eyes do not match.

I’m not giving up on the idea just yet, but it seems too hard to get right at the moment. The code for all this is on GitHub, just in case you want to tinker with it. If you have a Nexus 5 and a cardboard, you can also try out the experiment over here.
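
To put a number on the difference between one meter and 20 meters, here is a small TypeScript sketch that computes the angle between the two eyes’ lines of sight to a point straight ahead. The default IPD of 64 mm is an assumed typical value, and `vergenceAngleDeg` is a made-up name.

```typescript
// Angle (in degrees) between the lines of sight of both eyes
// when looking at a point straight ahead at the given distance.
function vergenceAngleDeg(distanceM: number, ipdM: number = 0.064): number {
  const halfAngleRad = Math.atan(ipdM / 2 / distanceM);
  return (2 * halfAngleRad * 180) / Math.PI;
}
```

For a marker one meter away this gives roughly 3.7 degrees, while at 20 meters it shrinks to roughly 0.18 degrees. That is why a single camera image can pass for both eyes at a distance, but not on a desk.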

I love the idea. Here is my last attempt at augmented reality within the browser. It doesn’t handle the stereo needed for the Google Cardboard, but it is a good starting point, I think. https://github.com/jeromeetienne/threex.webar

Another interesting option may be to try jsartoolkit, ARToolKit compiled to JavaScript. It may provide better marker localisation: https://github.com/artoolkit/jsartoolkit5

I would love to solve the issue on the Google Cardboard :)

Saw your threex.webar approach and accompanying post – your dancing Hatsune was the inspiration for this! :)

The jsartoolkit port looks very promising, thanks for the pointer – I’ll give it a try :)