This is something I have wanted to see for a long time: put your smartphone in a cardboard-style HMD and have a VR experience that allows you to turn, kneel, stand and walk around… without being bound by cables. The kind of experience that is now called “room-scale VR”.

That means having a VR experience without having to buy expensive VR hardware, and without going through setup hell (it took me days to be able to play Elite on my Oculus DK2 again after I updated the SDK and Horizons to the latest versions). I want to see something that is available to (almost) everyone. Today. Plus – of course – I want all of this to happen from within a browser.

I have a specific scenario in mind:

A couple of people enter a room, say in a museum. An employee tells them a URL and hands out cardboard-like HMDs to put their smartphones in (remember, they’re cheap). The visitors navigate to the URL on their phones, insert them into the HMDs and put them on. Then, they have a shared, room-scale VR experience.

Question: Could it be possible? With today’s smartphones?

I asked around on Twitter, and the response was rather pessimistic. That just adds to the challenge, right?

The Concept

What is currently missing for room-scale VR is tracking of lateral movement, i.e. the HMD’s position. Rotational tracking is available through the gyroscope and accelerometer sensors, and the sensor data is ok-ish; good enough to work with, at least. But the data is not good enough to track lateral movement.

However, we still have the camera on the phone. In my previous experiment, I wanted to use the camera stream and barcode markers to create a mixed reality experience. But for this, I decided to use the camera stream not to show it to the user, but to leverage it to track lateral movement.

Tracking HMD Lateral Movement using Camera Stream and Marker Detection

Marker detection libraries for AR, when detecting a marker, offer a transformation matrix that describes where the detected marker is (and how it is rotated) relative to the camera. In short: given a known position of the camera, the detection library gives an estimate of where the marker is. Now, let’s just turn that around: given that we know where the marker is, we can use the detection data to get an estimate of where the camera is. Bingo.

So… we need to place 2D barcode pattern markers on the surrounding walls (floor, ceiling), use the phone’s built-in environment-facing camera to stream camera data to the app, and analyze the marker detection results. When a marker is detected, we use its position as the point of origin, apply the inverted transformation matrix to it, and voilà: you now have data that describes where the camera is (or, to be precise, where it thinks the camera is).
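To make the "turn it around" step concrete, here is a minimal sketch in plain JavaScript. It is not the code used in the experiment (that one uses three.js, see further down). The function names invertRigidTransform and estimateCameraPosition are made up for illustration, and the sketch assumes that the detection matrix contains only rotation and translation (no scale), that it is stored column-major as in OpenGL and three.js, and that the marker's axes are aligned with the room's axes.

```javascript
// Invert a rigid transform (rotation R plus translation t, no scale) that is
// stored as a column-major 4x4 array: element (row r, column c) is m[c * 4 + r].
// The inverse of such a transform is (R^T, -R^T * t).
function invertRigidTransform(m) {
  var tx = m[12], ty = m[13], tz = m[14];
  var inv = [
    m[0], m[4], m[8], 0,   // column 0 of R^T is row 0 of R
    m[1], m[5], m[9], 0,   // column 1 of R^T is row 1 of R
    m[2], m[6], m[10], 0,  // column 2 of R^T is row 2 of R
    0, 0, 0, 1
  ];
  // Translation part of the inverse: -R^T * t
  inv[12] = -(m[0] * tx + m[1] * ty + m[2] * tz);
  inv[13] = -(m[4] * tx + m[5] * ty + m[6] * tz);
  inv[14] = -(m[8] * tx + m[9] * ty + m[10] * tz);
  return inv;
}

// markerInCamera: marker pose relative to the camera (what the library reports).
// markerRoomPosition: where we know the marker hangs in the room, as {x, y, z}.
// Assumption: marker axes and room axes are aligned, so the camera offset
// expressed in marker coordinates can simply be added to the marker position.
function estimateCameraPosition(markerInCamera, markerRoomPosition) {
  var cameraInMarker = invertRigidTransform(markerInCamera);
  return {
    x: markerRoomPosition.x + cameraInMarker[12],
    y: markerRoomPosition.y + cameraInMarker[13],
    z: markerRoomPosition.z + cameraInMarker[14]
  };
}
```

If the marker is mounted rotated relative to the room, the offset would have to be rotated into room coordinates first; the sketch leaves that out to keep the idea visible.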

Results

It works™.

Really, the results are more than promising.

I placed three markers on the walls and, instead of constantly running around with my phone, I took some videos with my phone and did the experiment with that pre-recorded data instead of a live stream. The data I obtained from the detection library was, well, shaky, to say the least. But, using some very simple and crude math, I could already get some meaningful values out of them.

As long as two markers are visible, things go pretty smoothly. When only one marker is left, things get a little more fragile. When no marker is in sight (or in sight but not detected): darkness, of course. Prerequisites for this approach are that at least one marker is always visible, that lighting conditions are acceptable, and that the camera is ok enough to not screw things up, e.g. by wildly trying to autofocus around.

With some more intelligent math that also takes the rotational data into account, one could get some very stable and reliable predictions about the camera position, I am sure.

See it in action

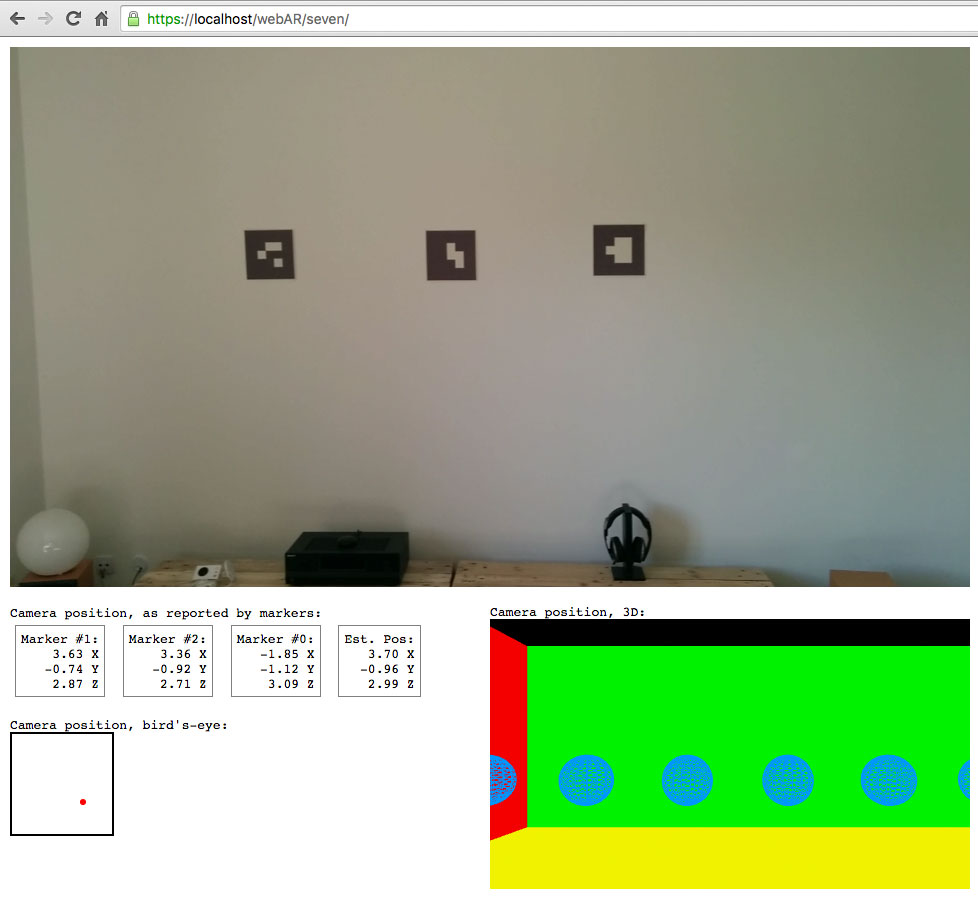

The experiment is available on GitHub. It plays the video in a loop, while the position estimation code is running. Below the video to the left are the numbers I’m getting from the detection library after applying the inverted matrix, and the resulting estimated position. Below that is a bird’s-eye view, showing the X and Z axis. To the right is a 3D scene where the estimated position is applied to the camera, to visualize position including Y axis movement.

Some Code

If you’re interested in code and implementation details, read on. If not, just skip this section. The source of this experiment is available on GitHub.

In contrast to my previous experiment, I used another pattern detection library this time: the JS port of ARToolkit. I found it to be more robust, and it is way faster (probably because it’s an Emscripten port).

I printed three barcode markers that should be easy and robust to detect, and pinned them to a wall. Next, I took a couple of videos (under good lighting conditions) with my good old Nexus 5 phone, trying to avoid rotational movement (not as easy as it sounds). Then it was time to start implementing.

1. Defining marker positions

The marker objects have their id as key. I store their initial position, relative to the camera, and some helper instances.

    // left to right: 1-2-0
    var existingMarkers = {
        1: {
            matrix: new THREE.Matrix4(),
            object: new THREE.Object3D(),
            spectator: new THREE.Object3D(),
            initialPosition: new THREE.Vector3(-2, 0, 10)
        },
        2: {
            matrix: new THREE.Matrix4(),
            object: new THREE.Object3D(),
            spectator: new THREE.Object3D(),
            initialPosition: new THREE.Vector3(0, 0, 10)
        },
        0: {
            matrix: new THREE.Matrix4(),
            object: new THREE.Object3D(),
            spectator: new THREE.Object3D(),
            initialPosition: new THREE.Vector3(+2, 0, 10)
        }
    };

2. Applying the matrix

When a marker is detected, get its id and see if we know about it:

    var markerInfo = arController.getMarker(i);
    var id = markerInfo.id;
    markersVisible.push(id);
    var markerItem = existingMarkers[id];
    if (!markerItem) {
        return;
    }

Get the marker’s transformation matrix:

    var markerRoot = markerItem.object;
    if (markerRoot.visible) {
        arController.getTransMatSquareCont(i, 1, markerRoot.markerMatrix, markerRoot.markerMatrix);
    } else {
        arController.getTransMatSquare(i, 1, markerRoot.markerMatrix);
    }
    markerRoot.visible = true;
    arController.transMatToGLMat(markerRoot.markerMatrix, markerRoot.matrix.elements);

Apply the inverted matrix to an object having the marker’s initial position (the “spectator”, i.e. the potential camera position):

    markerItem.matrix.getInverse(markerRoot.matrix);
    markerItem.spectator.matrix.identity();
    markerItem.spectator.matrix.setPosition(markerItem.initialPosition);
    markerItem.spectator.applyMatrix(markerItem.matrix);

Now read back the calculated position (only lateral, no rotation) and store the data.

    var pos = markerItem.spectator.position;
    sensorData.push({
        x: pos.x,
        y: pos.y,
        z: pos.z
    });

3. Normalize the data

What we now get is a set of numbers that are flaky and shaky. Turning those into usable, meaningful numbers is probably a very easy task for someone with a background in math or in working with sensor data. I, however, did some googling and went for a stupid-simple 3-step approach: First, replace values that are too far off and obviously wrong with the last estimate (this only kicks in after the first 120 frames). Next, take the mean of the values that are left over (sensorMedium in the code). Finally, apply a super simple low-pass filter. The code runs once per axis and looks like this:

    // artifact rejection: after a warm-up of 120 frames, replace readings
    // that jump too far from the last estimate with that last estimate
    if (frameCount > 120) {
        var artifactThreshold = 1.9; // 1.7;
        for (var sensor = 0, sensorCount = sensorData.length; sensor < sensorCount; sensor++) {
            var sensorValue = sensorData[sensor][axis];
            var lastValue = estimatedPosition[axis];
            if (Math.abs(lastValue - sensorValue) > artifactThreshold) {
                sensorData[sensor][axis] = lastValue;
            }
        }
    }

    // obtain mean of all readings for this axis
    sensorMedium[axis] = 0;
    for (sensor = 0, sensorCount = sensorData.length; sensor < sensorCount; sensor++) {
        sensorMedium[axis] += sensorData[sensor][axis];
    }
    sensorMedium[axis] /= sensorData.length;

    // apply filter
    estimatedPosition[axis] = smoothen(sensorMedium[axis], estimatedPosition[axis]);

The crude low-pass filter I used:

    function smoothen(newValue, oldValue) {
        var ALPHA = 0.07; //0.05;
        oldValue = oldValue + ALPHA * (newValue - oldValue);
        return oldValue;
    }

And that's it – that is the data I'm visualizing.
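For readers who want to play with the three steps in isolation, here is a self-contained sketch that bundles them into one function. It is a restructured illustration, not the code from the repository: the names lowPass and updateEstimate and the rejectArtifacts flag (standing in for the frameCount > 120 warm-up check) are my own, and holding the previous estimate when no reading is available is an assumption added here.

```javascript
var ALPHA = 0.07;              // low-pass factor, same value as above
var ARTIFACT_THRESHOLD = 1.9;  // maximum accepted jump per axis, same value as above

// Move the old value a fraction ALPHA of the way towards the new value.
function lowPass(newValue, oldValue, alpha) {
  return oldValue + alpha * (newValue - oldValue);
}

// readings: array of {x, y, z}, one entry per detected marker in this frame.
// previous: the last estimated position as {x, y, z}.
// rejectArtifacts: whether outliers should be replaced by the last estimate.
// Returns a new estimate and leaves both inputs unmodified.
function updateEstimate(readings, previous, rejectArtifacts) {
  var next = { x: previous.x, y: previous.y, z: previous.z };
  if (readings.length === 0) {
    return next; // assumption: no marker visible, so keep the last estimate
  }
  ['x', 'y', 'z'].forEach(function (axis) {
    var sum = 0;
    readings.forEach(function (reading) {
      var value = reading[axis];
      // Step 1: artifact rejection
      if (rejectArtifacts && Math.abs(previous[axis] - value) > ARTIFACT_THRESHOLD) {
        value = previous[axis];
      }
      sum += value;
    });
    // Step 2: mean of the (possibly corrected) readings
    var mean = sum / readings.length;
    // Step 3: low-pass filter towards the mean
    next[axis] = lowPass(mean, previous[axis], ALPHA);
  });
  return next;
}

// Usage: two markers report x = 1 and x = 3 while the last estimate is x = 0.
// The second reading jumps by more than the threshold and is replaced by 0.
var estimate = updateEstimate(
  [{ x: 1, y: 0, z: 0 }, { x: 3, y: 0, z: 0 }],
  { x: 0, y: 0, z: 0 },
  true
);
console.log(estimate.x);
```

Because an outlier is replaced by the last estimate rather than dropped, it still counts in the mean and pulls the result towards the previous position, which adds some extra damping.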

Next Steps

I am quite excited about the results of this experiment; this all looks very promising. However, it would be sweet to have the following:

- Assemble a demo that does a live analysis of the camera input stream.

- Find out how to incorporate rotational movement.

- To enable other people to actually try this out, there needs to be some kind of UI or configuration file describing the marker positions.

- For a shared VR experience, devices should expose their position to other devices – WebRTC’s DataChannel sounds like a good thing here.
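As a thought experiment for the configuration file idea, the marker positions could live in a small JSON document that is turned into the lookup structure used above. Everything in this sketch is hypothetical: the format, the field names and the parseMarkerConfig function do not exist in the experiment.

```javascript
// Hypothetical configuration: one entry per printed marker, with its barcode
// id and its position in the room (same values as in existingMarkers above).
var exampleConfig = JSON.stringify({
  markers: [
    { id: 1, position: [-2, 0, 10] },
    { id: 2, position: [0, 0, 10] },
    { id: 0, position: [2, 0, 10] }
  ]
});

// Parse and validate the JSON text and return an object keyed by marker id.
function parseMarkerConfig(json) {
  var config = JSON.parse(json);
  if (!config || !Array.isArray(config.markers)) {
    throw new Error('config must contain a "markers" array');
  }
  var markers = {};
  config.markers.forEach(function (entry) {
    var p = entry.position;
    var valid = Number.isInteger(entry.id) &&
      Array.isArray(p) && p.length === 3 &&
      p.every(function (v) { return typeof v === 'number' && isFinite(v); });
    if (!valid) {
      throw new Error('invalid marker entry: ' + JSON.stringify(entry));
    }
    if (Object.prototype.hasOwnProperty.call(markers, entry.id)) {
      throw new Error('duplicate marker id: ' + entry.id);
    }
    markers[entry.id] = { initialPosition: { x: p[0], y: p[1], z: p[2] } };
  });
  return markers;
}

var parsedMarkers = parseMarkerConfig(exampleConfig);
console.log(Object.keys(parsedMarkers).length + ' markers configured');
```

In the actual app, each entry would additionally need the three.js helper instances (matrix, object, spectator), and initialPosition would become a THREE.Vector3.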

I hope I’ll find some time soon – I can sense some serious awesomeness there!